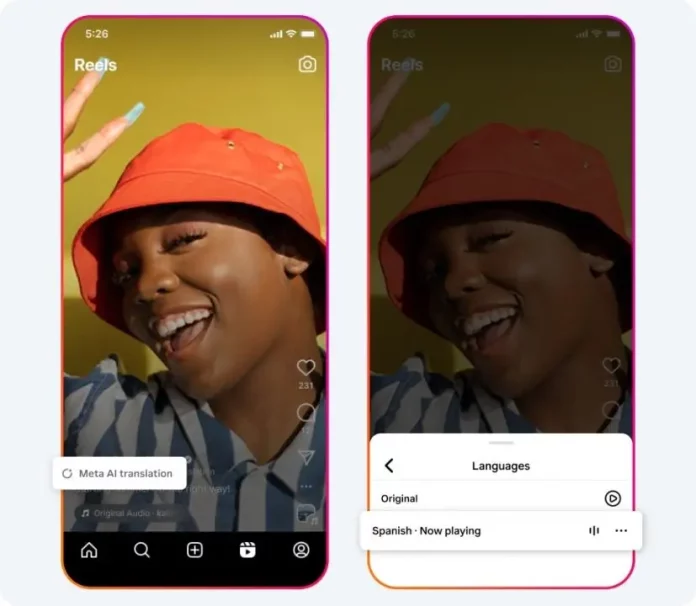

Meta has unveiled an AI-powered translation feature that brings automatic dubbing to Instagram Reels and other video content across its platforms. The feature translates the speaker’s words and synchronizes the dubbed audio with the speaker’s lip movements, creating a more seamless and natural viewing experience.

With millions of people watching video worldwide, the move aims to break down language barriers and make content more accessible across regions. The AI translation system can recognize multiple languages and automatically generate a translated version, so viewers from different linguistic backgrounds can watch the same videos without relying on subtitles.

Lip-sync is a key highlight of the feature. By analyzing facial movements and speech patterns, Meta’s AI matches the dubbed audio to the original speaker’s lip movements. This makes the translation appear more fluid and reduces the awkwardness often associated with dubbed content.

The technology will be particularly useful for content creators who want to reach a wider audience without the laborious process of manually dubbing or subtitling their videos. With automatic translation and dubbing, Meta is offering a tool that makes content understandable to viewers who do not speak the original language.

As Meta continues to roll out the feature, it is expected to have a significant impact on how content is consumed on its platforms, potentially making video sharing more inclusive and globally accessible.